Automated machine learning, or AutoML, has generated plenty of excitement as a pathway to “democratizing data science,” and has also encountered its fair share of skepticism from data science’s gatekeepers.

Complicating the conversation even further, there is no standard definition of AutoML, which can make the debate incredibly difficult to follow, even for those well-versed in the field.

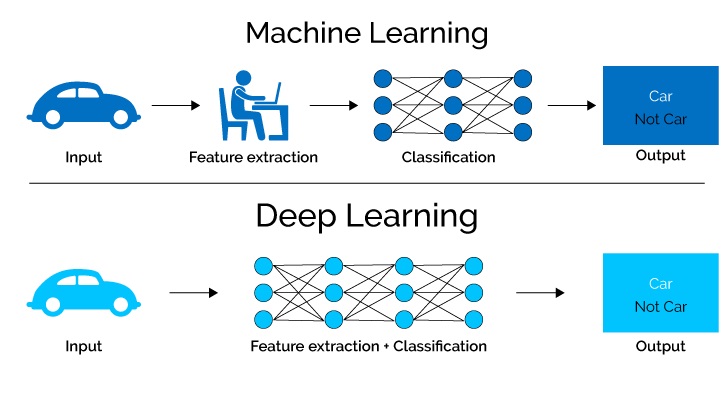

The goal is simple enough: By embracing a new AI mindset and automating key components of algorithm design, AutoML can make machine learning more accessible to users of various stripes, including individuals, small startups, and large enterprises. More specifically, AutoML can make deep learning, machine learning’s more advanced subset, accessible to data scientists, despite its more complicated nature.

That’s an attractive value proposition, one that promises unprecedented efficiency, cost savings, and revenue creation opportunities. It also helps explain why the AutoML market is expected to grow astronomically in the coming years. According to a forecast by P&S Intelligence, the global AutoML market is on pace to grow from $269.6 million in 2019 to more than $14.5 billion by 2030, advancing at a CAGR of over 40%.
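As a quick sanity check on those figures, the compound annual growth rate implied by the two endpoints can be computed directly. This is a minimal sketch; the function name `cagr` is an illustrative choice, and the 11-year span assumes the forecast runs from 2019 through 2030.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1 / years) - 1

# Endpoints quoted in the forecast: $269.6 million (2019) and $14.5 billion (2030).
rate = cagr(269.6e6, 14.5e9, 2030 - 2019)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands a little above 40%, which is consistent with the forecast's "over 40%" claim.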

But what will fall under the umbrella of this rapidly surging market? There is no neat-and-simple answer. Instead, to better understand AutoML, we should examine it as a spectrum rather than as a black-and-white choice between fully autonomous and fully manual. Consider the automotive industry, where autonomy is split into different levels, ranging from Level 1 (driver assistance technology) to Level 5 (fully self-driving cars, which remain a far-off prospect). Thinking of AutoML in this way serves as a useful reminder: building automated AI models isn’t an all-or-nothing proposition. Here’s a closer look at the graduated scale that’s redefining the AI pipeline.

Level 0: No Automation

By definition, fully manual deep learning processes rely on the skill sets of data scientists and other experts to carry out key processes, including programming neural networks, handling data, conducting architecture searches, and so on.

The level of skill required to execute these tasks is formidable, which helps explain why deep learning (along with the expensive talent required to implement it) has proven elusive to many organizations.

Level 1: High-Level DL Frameworks

While manually implementing DL from scratch poses many obstacles, the accumulated work of DL programmers and data scientists has led to the creation of high-level frameworks like Caffe, TensorFlow, and PyTorch, which provide DL models and pipelines that users can build on to write their own networks and more.

Just as Level 1 autonomous driving, which encompasses Advanced Driver Assistance Systems (ADAS), has delivered substantial benefits to ordinary drivers while still being a long way off from full autonomy, these high-level DL libraries are making DL pipelines simpler and more efficient. Implementation still requires a high degree of expertise in programming and DL, but it doesn’t require PhD-level data science expertise, making it much more accessible to many organizations.

Level 2: Solving Set Tasks

Leveraging pre-trained models alongside transfer learning yields an even more automated process.

This level of automation builds upon the availability of trained models, for example from open source repositories, and of labeled data; the models are then fine-tuned to solve a given problem. Again, this level doesn’t obviate the need for data expertise; it relies on engineers to pre-process data and tweak the model according to the task at hand.
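The fine-tuning idea can be illustrated with a deliberately tiny, dependency-free sketch: a "pre-trained" layer whose weights stay frozen, plus a small trainable head fitted to a new task. Everything here is a toy assumption made for illustration (the fixed weights in `FROZEN_WEIGHTS`, the four-example dataset, the logistic-regression head); in practice the frozen part would be a real pre-trained network loaded through a framework.

```python
import math

# Hypothetical stand-in for a pre-trained backbone: these weights are never updated.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def frozen_features(x):
    """Apply the frozen layer followed by a ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(data, epochs=200, lr=0.5):
    """Fit only a logistic-regression head on top of the frozen features (plain SGD)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = frozen_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

# Toy labeled data for the new task: label 1 when the first input exceeds the second.
train_data = [((2.0, 0.0), 1), ((3.0, 1.0), 1), ((0.0, 2.0), 0), ((1.0, 3.0), 0)]
head_w, head_b = fine_tune_head(train_data)
print([predict(x, head_w, head_b) for x, _ in train_data])
```

Only the head's three numbers are learned. The expensive part, the backbone, is reused as-is, which is why this level needs less data and less expertise than training from scratch, though someone still has to prepare the data and choose what to freeze.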

Level 3: AutoML with NAS

Neural architecture search (NAS) is an emerging field in which algorithms scan thousands of available AI models to yield a suitable algorithm for a given task. Put another way, AI is brought to bear to build even better AI.
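To make the search idea concrete, here is a minimal sketch of random search, the simplest baseline in this family, over a toy architecture space. It is not how production NAS systems work. The search space and `proxy_score` are hypothetical stand-ins: a real system would train each candidate, at least briefly, and score it on validation data.

```python
import random

# Hypothetical search space: each architecture is one choice per dimension.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "width": [32, 64, 128],
    "activation": ["relu", "tanh"],
}

def sample_architecture(rng):
    """Draw one candidate architecture uniformly at random."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def proxy_score(arch):
    """Made-up scoring rule standing in for 'train the candidate and validate it'."""
    score = 0.70 + 0.02 * arch["num_layers"] - 0.0005 * abs(arch["width"] - 64)
    if arch["activation"] == "relu":
        score += 0.01
    return score

def random_search(num_trials, seed=0):
    """Evaluate num_trials random candidates and keep the best one seen."""
    rng = random.Random(seed)
    best_arch, best_score, history = None, float("-inf"), []
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = proxy_score(arch)
        history.append((arch, score))
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score, history

best_arch, best_score, history = random_search(num_trials=20)
print(best_arch, round(best_score, 3))
```

More sophisticated NAS methods replace the uniform sampling with a learned or evolutionary strategy, but the loop of proposing, evaluating, and keeping the best has the same shape.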

While NAS has been the exclusive preserve of Big Tech companies like Google and Facebook and of leading academic institutions like Stanford University, further innovation in the field will beget greater scalability and affordability, opening a range of highly beneficial applications, including more sophisticated analysis of medical images, for instance.

Level 4: Full Automation

When the deep learning pipeline is fully automated, meta-models will set the parameters needed for a given task. Given training data, the meta-model can devise the best architectures for the task at hand, as well as supply prior knowledge on the architecture hyper-parameters.
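One very simple way to picture a meta-model supplying "prior knowledge" is a nearest-neighbor lookup: describe the new dataset with a few summary numbers, find the most similar past task, and start from the architecture that worked there. The sketch below is an illustration under stated assumptions, not a description of any real system. The `PAST_TASKS` records, the two meta-features, and the function names are all hypothetical.

```python
import math

# Hypothetical memory of past tasks:
# (log10 of sample count, number of classes) -> architecture that worked well.
PAST_TASKS = [
    ((3.0, 2.0), {"num_layers": 2, "width": 32}),
    ((4.0, 5.0), {"num_layers": 4, "width": 64}),
    ((5.0, 10.0), {"num_layers": 8, "width": 128}),
]

def meta_features(num_samples, num_classes):
    """Summarize a dataset with two crude, unscaled descriptors."""
    return (math.log10(num_samples), float(num_classes))

def suggest_architecture(num_samples, num_classes):
    """Return the architecture of the most similar past task as a starting point."""
    query = meta_features(num_samples, num_classes)
    _, arch = min(PAST_TASKS, key=lambda task: math.dist(query, task[0]))
    return dict(arch)

print(suggest_architecture(num_samples=1200, num_classes=2))
print(suggest_architecture(num_samples=80000, num_classes=10))
```

A genuine meta-model would learn this mapping from many tasks rather than look it up, and would likely hand its suggestion to a search procedure as a prior rather than treat it as final.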

Although full automation is still several years off, working toward meta-models will deliver significant gains in efficiency and sophistication even at the lower levels of autonomy (much as innovators working to eventually develop self-driving cars have already rolled out improvements to automotive technology). Because each level of autonomy builds on the other, NAS models will play an important role in finding the best meta-models to run in each use case.